Mastering Reinforcement Learning: Unraveling Its Power and Potential

Reinforcement Learning: Unveiling the Power of Adaptive Learning

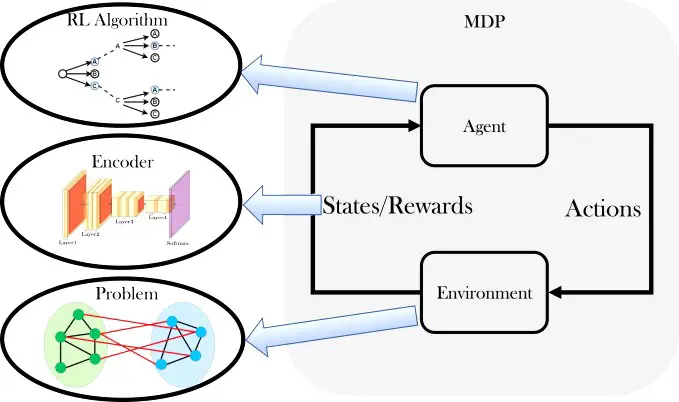

Reinforcement Learning (RL) is a pivotal concept in machine learning. Loosely mirroring how humans learn by trial and error, an RL system makes decisions, observes their consequences, and adjusts its behavior to maximize cumulative reward. At its core is an agent (the learner or decision-maker) that interacts with an environment to achieve specific goals.

Core Components of Reinforcement Learning

Agent:

The agent is the learner or decision-maker that navigates the environment. It takes actions that affect the environment, perceives the resulting feedback, and learns from these interactions.

Environment:

The environment is everything the agent interacts with while learning. It responds to each of the agent's actions by moving to a new state and providing feedback in the form of rewards or penalties.

Actions:

Actions refer to the decisions or moves made by the agent within the environment. Each action affects the subsequent state and influences the rewards received by the agent.

Rewards:

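Rewards are the feedback signal for the agent's actions, typically a number received after each step. They indicate the success or failure of the agent's decisions, and because the agent seeks to maximize the cumulative reward it collects over time, they guide it toward the desired goals.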
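To tie the four components together, here is a minimal Python sketch of the agent-environment loop. The `CorridorEnv` class, its reward values, and the helper names are hypothetical choices for illustration: the agent moves left or right along a short corridor, pays a small penalty per step, and earns a bonus at the goal. The episode's rewards are then combined into a discounted cumulative reward; the discount factor `gamma` is an assumption, though it is a common way to define "cumulative reward".

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        """Start a new episode at the left end and return the initial state."""
        self.pos = 0
        return self.pos

    def step(self, action):
        """Apply action (-1 = left, +1 = right); return (next_state, reward, done)."""
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else -0.1  # assumed: small step penalty, bonus at the goal
        return self.pos, reward, done


def run_episode(env, policy, max_steps=100):
    """Agent-environment loop: observe the state, act, receive a reward and the next state."""
    state = env.reset()
    rewards = []
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        rewards.append(reward)
        if done:
            break
    return rewards


def discounted_return(rewards, gamma=0.9):
    """Cumulative reward G = r_0 + gamma*r_1 + gamma^2*r_2 + ..., computed backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rng = random.Random(0)


def random_policy(state):
    """A non-learning agent: ignores the state and moves left or right at random."""
    return rng.choice([-1, 1])


rewards = run_episode(CorridorEnv(), random_policy)
print(f"{len(rewards)} steps, discounted return {discounted_return(rewards):.3f}")
```

The random policy only drives the loop; a learning agent would replace `random_policy` with a policy that improves based on the rewards it collects.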

Applications of Reinforcement Learning

Gaming Industry:

Reinforcement Learning has made remarkable strides in gaming, most visibly in game-playing AI. From classic Atari video games to the board game Go (notably DeepMind's AlphaGo), RL has enabled AI systems to master a wide range of gaming scenarios.

Robotics and Automation:

In robotics, RL facilitates adaptive learning for robots, allowing them to navigate unknown terrains, manipulate objects, and execute complex tasks in dynamic environments.

Finance and Trading:

The financial sector uses RL for decision-making: optimizing portfolios, managing risk, and trading algorithmically, with strategies that adapt to market fluctuations.

Reinforcement Learning Algorithms

Q-Learning:

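Q-learning, a fundamental model-free RL algorithm, learns how to act in a Markov Decision Process (MDP). It maintains a Q-table of estimates Q(s, a), the expected cumulative reward of taking action a in state s, and updates each entry using the observed reward plus the discounted value of the best action in the next state. Acting greedily with respect to the learned Q-values then yields the optimal actions.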
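A minimal tabular Q-learning sketch in Python follows. The six-state chain environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the epsilon-greedy exploration rule are assumptions chosen for illustration. Each update moves Q(s, a) a fraction `alpha` of the way toward the observed reward plus the discounted value of the best action in the next state.

```python
import random

N_STATES = 6       # hypothetical chain: states 0..5, state 5 is the terminal goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right


def step(state, action):
    """Toy deterministic MDP: reward 1.0 on reaching the goal, 0.0 otherwise."""
    next_state = max(state - 1, 0) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # the Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon (or on ties), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)),
            # with no bootstrapping from the terminal state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)  # expected: [1, 1, 1, 1, 1] (always move right)
```

Because the only reward sits at the right end of the chain, the learned greedy policy moves right from every non-terminal state. Epsilon-greedy is one common exploration strategy, not the only one.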

Deep Q-Networks (DQN):

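DQN combines Q-learning with deep neural networks, extending RL to complex environments with large or high-dimensional state spaces (such as raw video-game frames) where a Q-table would be impractical. A neural network approximates the Q-values, and techniques such as experience replay and a separate target network help stabilize training.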
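The sketch below illustrates what distinguishes a DQN from a Q-table: a neural network maps a state to one Q-value per action, and it is trained on minibatches sampled from an experience-replay buffer, regressing toward targets computed by a periodically synced target network. It uses NumPy with a hand-written two-layer network and manual backpropagation. The network size, learning rate, sync interval, and the randomly generated transitions are all hypothetical stand-ins for a real environment and a deep-learning framework.

```python
import random
from collections import deque

import numpy as np

rng = np.random.default_rng(0)
GAMMA = 0.99


def init_q_network(n_inputs, n_hidden, n_actions):
    """Tiny two-layer MLP standing in for the deep Q-network (an assumption)."""
    return {
        "W1": rng.normal(0.0, 0.1, (n_inputs, n_hidden)), "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, (n_hidden, n_actions)), "b2": np.zeros(n_actions),
    }


def forward(net, states):
    """Map a batch of states (B, n_inputs) to Q-values (B, n_actions)."""
    hidden = np.maximum(0.0, states @ net["W1"] + net["b1"])  # ReLU
    return hidden @ net["W2"] + net["b2"], hidden


def td_targets(target_net, rewards, next_states, dones):
    """y = r + gamma * max_a' Q_target(s', a'); no bootstrapping past terminal states."""
    next_q, _ = forward(target_net, next_states)
    return rewards + GAMMA * (1.0 - dones) * next_q.max(axis=1)


def train_step(net, target_net, batch, lr=0.01):
    """One gradient-descent step on 0.5 * mean squared TD error; returns the pre-step loss."""
    states, actions, rewards, next_states, dones = batch
    y = td_targets(target_net, rewards, next_states, dones)
    q, hidden = forward(net, states)
    rows = np.arange(len(actions))
    td_error = q[rows, actions] - y
    # Manual backpropagation: only the Q-value of the action actually taken gets a gradient.
    grad_q = np.zeros_like(q)
    grad_q[rows, actions] = td_error / len(actions)
    grad_hidden = (grad_q @ net["W2"].T) * (hidden > 0)
    grads = {
        "W2": hidden.T @ grad_q, "b2": grad_q.sum(axis=0),
        "W1": states.T @ grad_hidden, "b1": grad_hidden.sum(axis=0),
    }
    for name, grad in grads.items():
        net[name] -= lr * grad
    return 0.5 * float(np.mean(td_error ** 2))


# Experience replay: store transitions, then train on random minibatches of them.
replay = deque(maxlen=10_000)
for _ in range(256):  # hypothetical transitions: 4-dim states, 2 actions, ~10% terminal
    s, s_next = rng.normal(size=4), rng.normal(size=4)
    replay.append((s, int(rng.integers(2)), float(rng.normal()), s_next, float(rng.random() < 0.1)))

net = init_q_network(4, 32, 2)
target_net = {k: v.copy() for k, v in net.items()}  # frozen copy used for targets

random.seed(0)
for step in range(1, 501):
    sample = random.sample(replay, 32)
    batch = tuple(np.array(column) for column in zip(*sample))
    loss = train_step(net, target_net, batch)
    if step % 100 == 0:
        target_net = {k: v.copy() for k, v in net.items()}  # periodic target sync
```

In practice the gradient step would come from a framework's automatic differentiation; it is written out by hand here only to keep the example small and dependency-light.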

Policy Gradient Methods:

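These methods parameterize the policy directly and adjust its parameters by gradient ascent on the expected return, so they do not need a value function to choose actions. REINFORCE is the classic example; Actor-Critic methods additionally learn a value function (the critic) alongside the policy (the actor) to reduce the variance of the gradient estimates. Because the policy can output a distribution over continuous actions directly, these methods are well suited to continuous action spaces.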
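Here is a minimal REINFORCE sketch in plain Python, assuming a hypothetical three-armed bandit so that each episode is a single step and the return equals the immediate reward. The softmax policy over per-arm preferences, the learning rate, and the running-average baseline (a common variance-reduction trick, not something REINFORCE requires) are illustrative choices. Each step samples an action from the policy and moves the parameters along the reward-weighted gradient of log pi(a).

```python
import math
import random

ARM_MEANS = [0.2, 0.5, 0.8]  # hypothetical bandit: arm 2 pays best on average


def softmax(prefs):
    """Numerically stable softmax: preferences -> action probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    theta = [0.0] * len(ARM_MEANS)  # policy parameters (one preference per arm)
    baseline = 0.0                  # running average reward, reduces gradient variance
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]  # sample a ~ pi_theta
        reward = rng.gauss(ARM_MEANS[action], 0.1)  # one-step episode: return = reward
        advantage = reward - baseline               # baseline excludes the current reward
        baseline += (reward - baseline) / t
        for k in range(len(theta)):
            # Gradient of log softmax w.r.t. theta_k is 1[k == action] - pi(k).
            grad_log_pi = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log_pi  # gradient ascent on expected return
    return softmax(theta)


final_probs = reinforce_bandit()
print([round(p, 3) for p in final_probs])
```

Longer episodes work the same way, except that each action's log-probability gradient is weighted by the return that followed it rather than by a single reward.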

Challenges and Future of Reinforcement Learning

Sample Inefficiency:

Reinforcement Learning often requires extensive data and exploration, posing a challenge in scenarios where real-world interactions are costly or time-consuming.

Ethical Implications:

As RL advances, ethical concerns surrounding its applications arise, particularly in autonomous systems and decision-making processes impacting human lives.

Continual Advancements:

Ongoing RL research aims to address these challenges. Directions such as meta-learning and multi-agent RL seek to overcome current limitations and broaden RL's applicability.

Conclusion

Reinforcement Learning represents a paradigm shift in machine learning, offering a mechanism for adaptive learning and decision-making. Its applications span diverse fields, promising innovations and breakthroughs. Despite challenges, continual advancements pave the way for an increasingly robust and versatile RL landscape.